Semantic Drone Dataset | Drone Dataset | Semantic Analysis Dataset

GitHub | Updated 2024-06-20 | Indexed 2024-06-21

Download link:

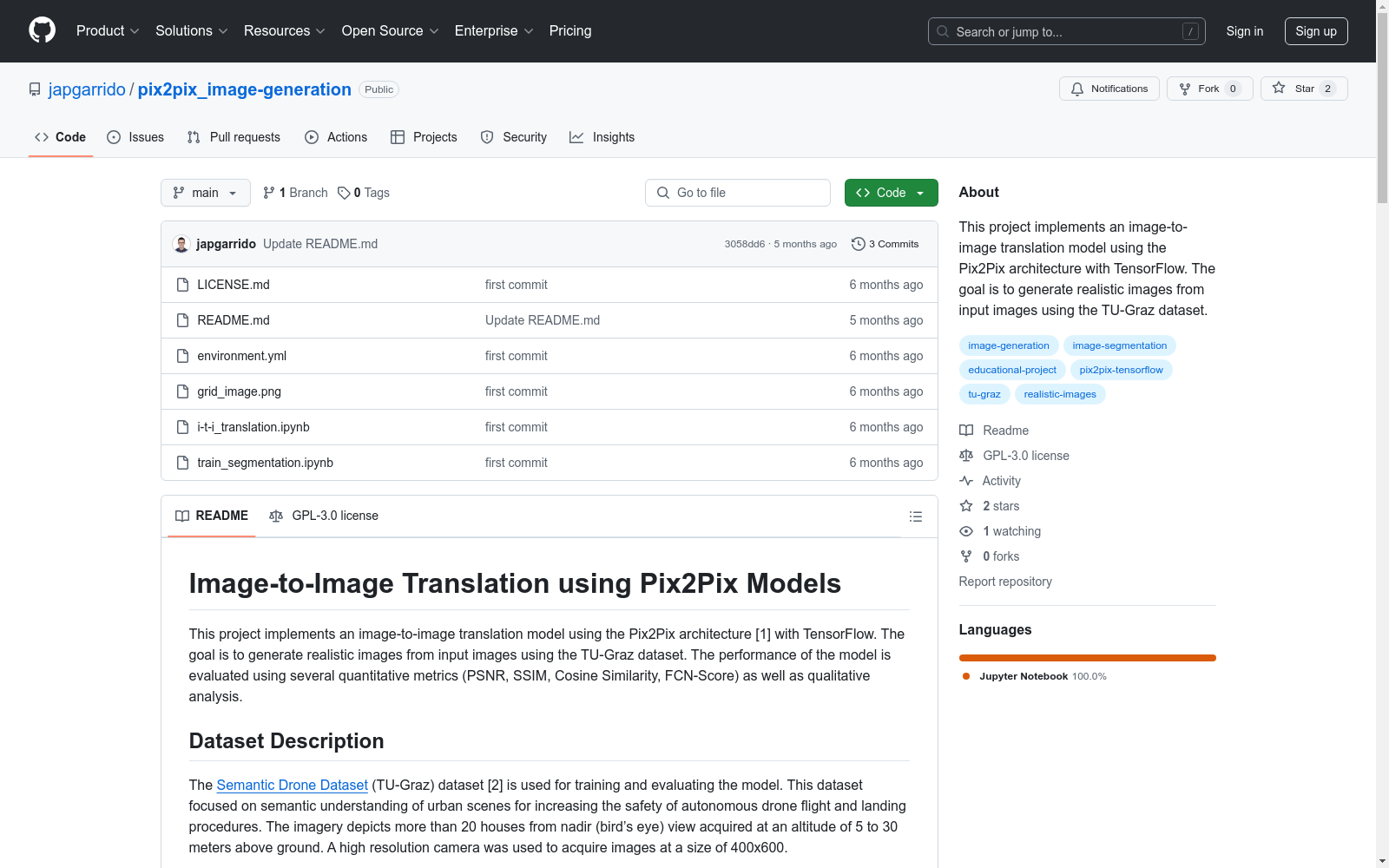

https://github.com/Angel3245/pix2pix_image-generation


Resource summary:


This dataset focuses on the semantic understanding of urban scenes, with the goal of improving the safety of autonomous drone flight and landing. It contains bird's-eye images of more than 20 houses, captured with a high-resolution camera at altitudes of 5 to 30 meters; the images are 400x600 pixels.

Created:

2024-06-20

Summary of Original Information

Dataset Description

- Name: Semantic Drone Dataset (TU-Graz)

- Source: Semantic Drone Dataset

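- Purpose: training and evaluating image-to-image translation models, with a focus on the semantic understanding of urban scenes to improve the safety of autonomous drone flight and landing.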

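- Features:

  - Bird's-eye images of more than 20 houses.

  - Images captured at altitudes of 5 to 30 meters.

  - Captured with a high-resolution camera; image size is 400x600.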

Evaluation Metrics

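- PSNR: Peak signal-to-noise ratio, measured in decibels; it quantifies how closely a generated image matches the real (ground-truth) image. A higher PSNR indicates better image quality.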

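- SSIM: Structural similarity index; it measures the similarity between the generated and real images, taking into account differences in structure, luminance, and contrast.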

- Cosine Similarity: measures image similarity as the cosine of the angle between the two images' feature vectors. The higher the value, the more similar the images.

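- FCN-Score: runs a fully convolutional network (FCN) segmenter on the generated images and scores its output against the ground-truth labels. A higher FCN-Score indicates better segmentation quality, i.e. generated images that are more semantically faithful.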

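- Qualitative Analysis: visual inspection of how realistic the generated images look, including side-by-side comparison with the real images and assessment by human observers.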
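The quantitative metrics listed above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the project's evaluation code: all function names are hypothetical, `global_ssim` is a simplified SSIM computed over the whole image as one window (the standard metric averages the same formula over local Gaussian windows), cosine similarity is applied to whatever feature vectors are passed in (raw pixels in the demo, which is an assumption since the source does not name the feature extractor), and `segmentation_scores` assumes the FCN segmenter's predicted label maps for the generated images are already available. Its per-pixel accuracy, per-class accuracy and mean IoU are the quantities the pix2pix paper reports as FCN-scores.

```python
import numpy as np


def psnr(real: np.ndarray, fake: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means the generated image is closer to the real one."""
    mse = np.mean((real.astype(np.float64) - fake.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val**2 / mse))


def global_ssim(real: np.ndarray, fake: np.ndarray, max_val: float = 255.0) -> float:
    """Simplified SSIM over the whole image as one window (standard SSIM averages local windows)."""
    x = real.astype(np.float64).ravel()
    y = fake.astype(np.float64).ravel()
    c1 = (0.01 * max_val) ** 2  # stabilizes the luminance term
    c2 = (0.03 * max_val) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(num / den)


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two flattened feature vectors (1.0 = same direction)."""
    u = np.asarray(a, dtype=np.float64).ravel()
    v = np.asarray(b, dtype=np.float64).ravel()
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def segmentation_scores(pred, label, num_classes: int) -> dict:
    """FCN-Score-style metrics: segmenter predictions on generated images vs. ground-truth labels."""
    pred = np.asarray(pred, dtype=np.int64).ravel()
    label = np.asarray(label, dtype=np.int64).ravel()
    # confusion[i, j] = number of pixels whose true class is i and predicted class is j
    confusion = np.bincount(
        num_classes * label + pred, minlength=num_classes**2
    ).reshape(num_classes, num_classes)
    tp = np.diag(confusion).astype(np.float64)
    true_per_class = confusion.sum(axis=1)
    pred_per_class = confusion.sum(axis=0)
    with np.errstate(divide="ignore", invalid="ignore"):
        class_acc = tp / true_per_class  # NaN for classes absent from the labels
        iou = tp / (true_per_class + pred_per_class - tp)
    return {
        "pixel_acc": float(tp.sum() / confusion.sum()),
        "class_acc": float(np.nanmean(class_acc)),
        "mean_iou": float(np.nanmean(iou)),
    }


if __name__ == "__main__":
    # Synthetic 400x600 RGB "real" image and a noisy "generated" copy, for illustration only
    rng = np.random.default_rng(0)
    real = rng.integers(0, 256, size=(400, 600, 3), dtype=np.uint8)
    noise = rng.normal(0, 10, size=real.shape)
    fake = np.clip(real + noise, 0, 255).astype(np.uint8)
    print(f"PSNR: {psnr(real, fake):.2f} dB")
    print(f"SSIM (global): {global_ssim(real, fake):.3f}")
    print(f"Cosine similarity (raw pixels): {cosine_similarity(real, fake):.3f}")
```

For reported numbers, a library implementation such as scikit-image's windowed SSIM is preferable to the single-window simplification shown here.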

AI-Compiled Summary

Dataset Introduction

Construction

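To build the Semantic Drone Dataset, the research team used a high-resolution camera on a drone flying at 5 to 30 meters to capture bird's-eye images of more than 20 houses. The images are stored at a resolution of 400x600 and are intended to provide rich visual data for the semantic understanding of urban scenes, thereby improving the safety of autonomous drone flight and landing.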

Characteristics

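The Semantic Drone Dataset stands out for its high-resolution images captured from a specific bird's-eye viewpoint, which make it well suited to semantic analysis of urban environments. In addition, the accompanying project evaluates models with several quantitative metrics (PSNR, SSIM, Cosine Similarity, and FCN-Score), which together provide a broad basis for assessing model performance.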

Usage

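To use the Semantic Drone Dataset with this project, first navigate to the project directory and create a conda environment. Then load and preprocess the image data and train the generator and discriminator of the Pix2Pix model, optimizing them with an adversarial loss and a reconstruction loss. During training, the model periodically saves checkpoints for later evaluation. Finally, the generated images can be evaluated quantitatively with PSNR, SSIM, Cosine Similarity, and FCN-Score, and qualitatively by visual inspection.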
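The training objective described above can be illustrated with a minimal NumPy sketch of the standard pix2pix losses: the generator minimizes an adversarial binary cross-entropy term plus a λ-weighted L1 reconstruction term, and the discriminator learns to separate real (input, target) pairs from generated ones. The function names, the [-1, 1] pixel scaling (chosen to match a tanh generator output), and λ = 100 (the value used in the original pix2pix paper) are assumptions; the repository's actual preprocessing and settings may differ.

```python
import numpy as np

# Hypothetical setting: 100 is the L1 weight used in the original pix2pix paper;
# the repository may use a different value.
LAMBDA_L1 = 100.0


def to_model_range(img_uint8: np.ndarray) -> np.ndarray:
    """Map uint8 pixels in [0, 255] to [-1, 1], the output range of a tanh generator."""
    return img_uint8.astype(np.float32) / 127.5 - 1.0


def to_uint8(img: np.ndarray) -> np.ndarray:
    """Inverse of to_model_range, clipped and rounded to valid pixel values."""
    return np.clip((img + 1.0) * 127.5, 0, 255).round().astype(np.uint8)


def bce(prob, target: float, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between discriminator probabilities and a constant target label."""
    p = np.clip(np.asarray(prob, dtype=np.float64), eps, 1 - eps)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))


def generator_loss(d_fake, fake, real, lam: float = LAMBDA_L1) -> float:
    """Adversarial term (G is rewarded when D labels generated pairs as real) plus weighted L1."""
    adversarial = bce(d_fake, 1.0)
    reconstruction = float(np.mean(np.abs(np.asarray(real) - np.asarray(fake))))
    return adversarial + lam * reconstruction


def discriminator_loss(d_real, d_fake) -> float:
    """D should output 1 for (input, real) pairs and 0 for (input, generated) pairs; halved as in pix2pix."""
    return 0.5 * (bce(d_real, 1.0) + bce(d_fake, 0.0))
```

Here `d_real` and `d_fake` stand for the discriminator's probability outputs (for example a patch-wise probability map). In an actual training loop these losses would be computed inside a deep-learning framework so that gradients flow back to the generator and discriminator; the L1 weight is the knob that trades output realism against fidelity to the target image.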

Background and Challenges

Background

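The Semantic Drone Dataset, created by TU-Graz, focuses on the semantic understanding of urban scenes, with the aim of improving the safety of autonomous drone flight and landing. It contains bird's-eye images of more than 20 houses, captured at altitudes of 5 to 30 meters, with an image resolution of 400x600. Its core research problem is accurate semantic segmentation of urban environments from high-resolution imagery, which provides reliable data to support drone navigation. The dataset is particularly relevant to improving flight safety and environmental perception in drone technology.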

Current Challenges

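The main challenges in building the Semantic Drone Dataset include acquiring and processing high-resolution images and ensuring that the data is diverse and representative. In application, the challenge is to convert images into semantic information reliably enough to support the drone's autonomous decisions. Specific challenges include: 1) training image-to-image translation models, which must balance the realism of the generated images against their semantic accuracy; 2) choosing evaluation metrics such as PSNR, SSIM, Cosine Similarity, and FCN-Score so that together they reflect model performance comprehensively; 3) updating and extending the dataset to keep pace with changing urban environments and advances in drone technology.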

Common Use Cases

Typical Use Case

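In the context of autonomous drone flight and landing, the Semantic Drone Dataset is used for image-to-image translation. With a Pix2Pix model, input drone-view images are translated into realistic high-resolution images, with the aim of improving navigation and landing safety in complex urban environments. This application strengthens the drone's visual perception and provides a basis for subsequent image processing and analysis.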

Related Work

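Researchers have applied image translation and semantic segmentation models, such as Pix2Pix and CycleGAN, to the Semantic Drone Dataset for drone visual perception and navigation. The dataset has also supported research on autonomous drone flight and landing at the intersection of computer vision and robotics. This work contributes both to academic research and to new approaches for practical drone applications.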

Recent Research on the Dataset

Latest Research Directions

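In the area of autonomous drone flight and landing safety, recent work on the Semantic Drone Dataset focuses mainly on image-to-image translation. Using the Pix2Pix model, researchers aim to translate input images into highly realistic output images in order to improve navigation and landing accuracy in complex urban environments, which in turn supports safer drone operation in practice. The evaluation used with the dataset, combining the quantitative metrics PSNR, SSIM, Cosine Similarity, and FCN-Score with qualitative analysis, gives a broad view of model performance and helps drive progress in the field.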

The above content was collected and summarized by AI.